Developing Digital Twins for cities requires integrating and synchronizing many technologies, services, and large volumes of real-time data (for example, traffic sensors, weather information, or energy control systems). This is where the Model Context Protocol (MCP) comes into play: it acts as a unified framework for connecting external data and services with language models. By standardizing how applications provide information to LLMs, MCP makes it possible to orchestrate the data sources and tools the digital twin needs to reflect the city’s current state, run predictive simulations, and support AI-driven decision-making. The result is better-informed urban management and a simpler path for adding new services to the smart city ecosystem.

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

General architecture

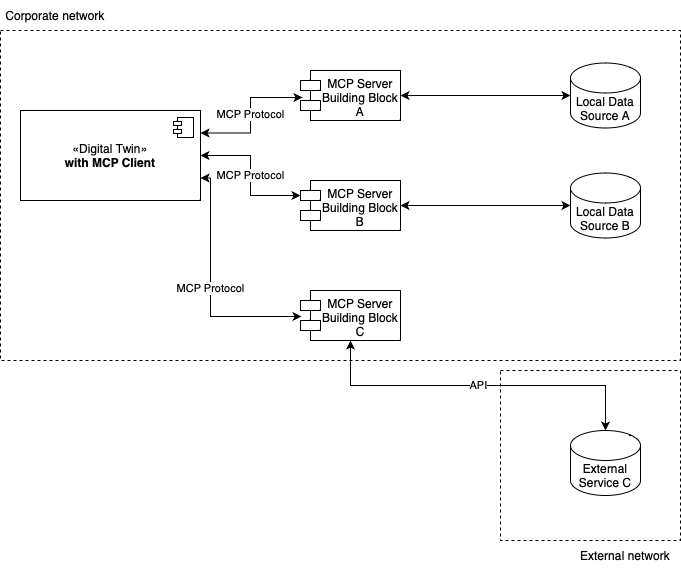

To enable more complex and varied use cases within a Digital Twins environment, it is essential to have a decoupled architecture that allows new services or functionalities to be added without altering the main structure. An MCP-based component serves precisely this purpose, offering a standardized connection point among data sources, tools, and services.

Overall, this MCP-based approach helps build a flexible and modular Digital Twins ecosystem that can quickly adapt to new challenges and opportunities in the smart city space. Each component, like a “Lego brick,” can be added or replaced without compromising overall functionality, enabling the design of robust and scalable workflows.

MCP Building Blocks

- MCP Servers: these function as lightweight modules exposing specific capabilities of the digital city (e.g., sensor data, traffic systems, or energy control). Each MCP Server integrates with a local or remote data source and publishes its functionality in a standardized way.

- MCP Clients: protocol clients that each maintain a one-to-one connection with an MCP Server. They live inside a host application and relay requests and responses between the host and the server.

- MCP Hosts: the applications that need information from the Digital Twin, such as AI services, development tools, or other platforms. A host manages its MCP Clients and “orchestrates” communication over MCP so that information is accessed securely and methodically.
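The relationship between these three building blocks can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions, not an MCP SDK: the class names, the `connect` and `available_tools` methods, and the tool names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MCPServer:
    """A lightweight module exposing one capability area of the city."""
    name: str
    tools: list[str]


@dataclass
class MCPClient:
    """Protocol client bound to exactly one server (one-to-one)."""
    server: MCPServer


@dataclass
class MCPHost:
    """Application that manages the clients and coordinates access."""
    clients: dict[str, MCPClient] = field(default_factory=dict)

    def connect(self, server: MCPServer) -> MCPClient:
        # One dedicated client per server; refuse duplicate connections.
        if server.name in self.clients:
            raise ValueError(f"already connected to {server.name!r}")
        client = MCPClient(server)
        self.clients[server.name] = client
        return client

    def available_tools(self) -> dict[str, list[str]]:
        # Collect what every connected server advertises.
        return {name: c.server.tools for name, c in self.clients.items()}


host = MCPHost()
host.connect(MCPServer("traffic", ["get_traffic_flow"]))
host.connect(MCPServer("lighting", ["set_streetlight_level"]))
print(host.available_tools())
```

The point of the sketch is the shape of the topology: the host never talks to a server directly, and each server gets its own client.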

Decoupled architecture principle

- Encapsulating functionality: each MCP Server is configured as a “module” that addresses a specific need in the city’s ecosystem (for example, air quality sensors, security cameras, or lighting control systems), which keeps each module independent of the others and easier to maintain.

- Easy extension: to add a new use case (for example disaster simulations or mobility pattern analysis), simply introduce a new MCP Server or connect an existing server to a new data source. The rest of the architecture remains unchanged.

- Minimal integration friction: by adhering to a common standard (MCP), the complexity of integrating different services or external platforms is reduced. Each MCP Server acts like a self-contained block that publishes data and receives requests without altering the logic of the other servers.
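As a rough illustration of the decoupling principle, the sketch below routes requests through a shared contract, so adding a module later does not touch existing ones. Everything here is an assumption made for the example: the `CityServer` contract, the `register` and `dispatch` helpers, the server classes, and the placeholder return values are hypothetical and not part of MCP itself.

```python
from typing import Any, Protocol


class CityServer(Protocol):
    """Common contract every module follows (hypothetical, for illustration)."""
    name: str

    def call(self, tool: str, args: dict[str, Any]) -> Any: ...


class AirQualityServer:
    name = "air_quality"

    def call(self, tool: str, args: dict[str, Any]) -> Any:
        if tool == "latest_reading":
            # Placeholder value; a real module would read from sensors.
            return {"zone": args["zone"], "pm25": 12.0}
        raise KeyError(f"unknown tool: {tool}")


registry: dict[str, CityServer] = {}


def register(server: CityServer) -> None:
    registry[server.name] = server


def dispatch(server_name: str, tool: str, args: dict[str, Any]) -> Any:
    # Routing depends only on the shared contract, not on any concrete server.
    return registry[server_name].call(tool, args)


register(AirQualityServer())


# A new use case arrives later: add one module, change nothing else.
class MobilityServer:
    name = "mobility"

    def call(self, tool: str, args: dict[str, Any]) -> Any:
        if tool == "trip_count":
            return {"district": args["district"], "trips": 0}  # placeholder
        raise KeyError(f"unknown tool: {tool}")


register(MobilityServer())
print(dispatch("air_quality", "latest_reading", {"zone": "north"}))
print(dispatch("mobility", "trip_count", {"district": "center"}))
```

Neither `dispatch` nor `AirQualityServer` changed when `MobilityServer` was introduced, which is the property the “Lego brick” analogy describes.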

Data Flow in the Digital Twin

Imagine you have a language model (LLM) that can converse and reason about text, but on its own, it cannot perform certain specialized tasks (for example, getting the latest real-time traffic data or controlling smart street lighting). You need a way for the LLM to “ask for help” from external tools.

The Model Context Protocol (MCP) is, in simple terms, a way for the LLM and external tools to communicate through an intermediate server.

- Tools as “Services”: the tools (such as a traffic data service, a weather API, or a streetlight controller) are published or exposed on an MCP server. Each tool is described and registered with a name or a way to invoke it.

- The LLM generates requests: when the LLM realizes during a conversation or reasoning process that it needs assistance from a tool (for example, to get the current traffic flow on a particular road), it constructs a structured message (often in JSON format) indicating which tool to use and with what parameters.

- The MCP server receives the request: the LLM “speaks” to the MCP server by sending this structured message through its MCP client. The MCP server reads the request and decides which specific tool should handle it.

- The tool executes the task: the MCP server calls the appropriate tool, providing it with the necessary data (for example, the location or the specific city zone) so it can perform its task.

- The MCP server returns the response to the LLM: once the tool finishes its job (for example, returning the real-time congestion data), it sends the response back to the MCP server, which then relays the information to the LLM.

- The LLM integrates the result: upon receiving the tool’s response, the LLM combines it with its own reasoning and continues the conversation or decision-making process, now enriched by the external data.
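The six steps above can be simulated end to end in plain Python. This is a deliberately simplified sketch: the LLM is replaced by stub functions, the message format is a bare JSON object rather than the real MCP wire format, and `get_traffic_flow`, its parameter, and the data it returns are hypothetical placeholders.

```python
import json


# Step 1: tools are registered by name on the (simulated) MCP server.
def get_traffic_flow(road: str) -> dict:
    # Placeholder data; a real tool would query live sensors.
    return {"road": road, "vehicles_per_minute": 42}


TOOLS = {"get_traffic_flow": get_traffic_flow}


def llm_build_request(road: str) -> str:
    # Step 2: the "LLM" emits a structured message naming tool and parameters.
    return json.dumps({"tool": "get_traffic_flow", "arguments": {"road": road}})


def mcp_server_handle(raw_request: str) -> str:
    # Step 3: the server parses the request and selects the tool.
    request = json.loads(raw_request)
    tool = TOOLS.get(request["tool"])
    if tool is None:
        return json.dumps({"error": f"unknown tool {request['tool']!r}"})
    # Step 4: the tool runs with the supplied arguments.
    result = tool(**request["arguments"])
    # Step 5: the server relays the tool's result.
    return json.dumps({"result": result})


def llm_integrate(raw_response: str) -> str:
    # Step 6: the "LLM" folds the tool output into its answer.
    response = json.loads(raw_response)
    if "error" in response:
        return f"Tool call failed: {response['error']}"
    data = response["result"]
    return f"{data['road']} currently carries {data['vehicles_per_minute']} vehicles per minute."


answer = llm_integrate(mcp_server_handle(llm_build_request("Main Street")))
print(answer)
```

Keeping the request and response as serialized JSON strings, rather than passing Python objects around, mirrors the fact that the LLM side and the tool side only share a message format, not code.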

MongoDB MCP Server example

MongoDB Lens is a local Model Context Protocol (MCP) server that provides full-featured access to MongoDB databases, letting LLMs use natural language to perform queries, run aggregations, optimize performance, and more.

MongoDB Lens MCP can be integrated with an LLM in a city environment to centralize and query data from multiple sources (traffic sensors, weather, public services, etc.) in a unified manner. By posing natural language questions, the LLM instructs MongoDB Lens MCP to perform queries, run aggregations on the database, optimize performance, and return clear, actionable answers. This approach helps urban administrators make faster, more accurate decisions based on real-time information, ultimately enhancing efficiency and quality of life throughout the city.

Example tools invocation

Tools in MCP allow servers to expose executable functions that can be invoked by clients and used by LLMs to perform actions. Clients discover the available tools through the `tools/list` endpoint and invoke them through the `tools/call` endpoint; the server performs the requested operation and returns the result.

- “Count all documents in the vehicles collection” ➥ Uses count-documents tool

- “Find the top 5 intersections with the highest real-time congestion” ➥ Uses find-documents tool

- “Show aggregated energy consumption data by district” ➥ Uses aggregate-data tool

- “List all unique types of sensors deployed in the city” ➥ Uses distinct-values tool

- “Search for incidents containing the word ‘leak’ in their description” ➥ Uses text-search tool
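To make the invocations above more concrete, here is a sketch of how two of them might look on the wire. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with `name` and `arguments` parameters. The tool names come from the list above, but the argument names (`collection`, `pipeline`), the `energy_readings` collection, and the `district` and `kwh` fields are assumptions made for illustration; the actual schemas are defined by the MongoDB Lens server itself. The `tools_call` helper is hypothetical.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids, unique per request


def tools_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# "Count all documents in the vehicles collection"
# (argument name is an assumption, not the server's documented schema)
count_req = tools_call("count-documents", {"collection": "vehicles"})

# "Show aggregated energy consumption data by district"
# (collection and field names are hypothetical)
aggregate_req = tools_call("aggregate-data", {
    "collection": "energy_readings",
    "pipeline": [
        {"$group": {"_id": "$district", "total_kwh": {"$sum": "$kwh"}}},
        {"$sort": {"total_kwh": -1}},
    ],
})

print(count_req)
print(aggregate_req)
```

In practice the LLM does not write these messages by hand: the host’s MCP client builds them from the tool name and arguments the model selects.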