From a software engineering perspective, architectural composition enables businesses to design, adjust, and roll out applications quickly by assembling them from modules or Packaged Business Capabilities (PBCs).

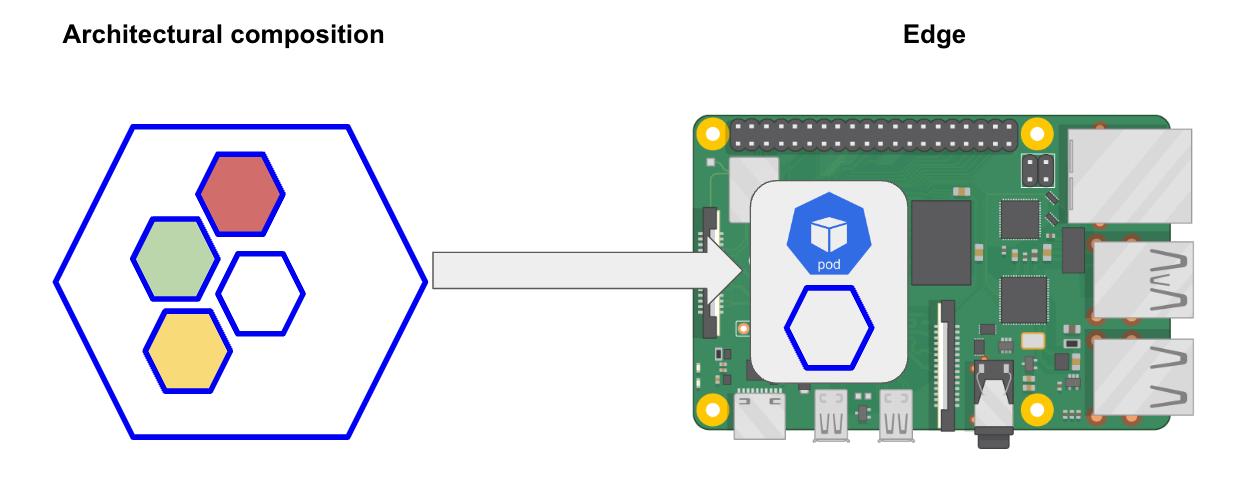

The convergence of Edge Computing and Composable architectures is redefining how applications are deployed and managed. Edge Computing processes data closer to its source, on devices or nodes near where the data originates, which enables swift responses and reduced latency. A Composable architecture, on the other hand, breaks an application down into individual modules or components that can be assembled, reconfigured, or extended as needs change. The beauty of this convergence lies in the ability to deploy only specific modules or components of an application at the edge. Only the essential functionality runs on edge devices while the rest remains in central data centers or the cloud, which maximizes resource efficiency and keeps the system adaptable and scalable.
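To make the idea of deploying only some modules at the edge more concrete, here is a minimal Python sketch of the placement decision. The module catalog, capability names, and memory figures are hypothetical, and a single memory budget stands in for whatever placement constraints a real platform would check.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Module:
    name: str
    capability: str  # business capability the module packages (PBC-style)
    memory_mb: int   # approximate runtime footprint (hypothetical figures)


def partition_modules(
    catalog: List[Module], edge_capabilities: Set[str], edge_memory_mb: int
) -> Tuple[List[Module], List[Module]]:
    """Split modules into those deployed at the edge and those left in the cloud."""
    edge = [m for m in catalog if m.capability in edge_capabilities]
    cloud = [m for m in catalog if m.capability not in edge_capabilities]
    needed = sum(m.memory_mb for m in edge)
    # Refuse a placement the device cannot hold rather than overcommitting it.
    if needed > edge_memory_mb:
        raise ValueError(f"edge modules need {needed} MB but the device has {edge_memory_mb} MB")
    return edge, cloud


catalog = [
    Module("video-ingest", "video-processing", 256),
    Module("failure-predictor", "prediction", 512),
    Module("reporting-ui", "reporting", 1024),
    Module("billing", "billing", 768),
]
edge, cloud = partition_modules(catalog, {"video-processing", "prediction"}, edge_memory_mb=1024)
print([m.name for m in edge])   # ['video-ingest', 'failure-predictor']
print([m.name for m in cloud])  # ['reporting-ui', 'billing']
```

In a Kubernetes setting this decision would typically be expressed as separate Deployments scheduled onto edge or cloud nodes; the function only illustrates the split.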


Aspects of the convergence of edge computing and architectural composition

In an ever-evolving technological landscape, the integration of Edge Computing with modular applications is redefining how we approach software deployment, processing, and overall system efficiency. As a software engineer, I’d like to shed light on a few significant aspects of this integration:

- Modularity and Scalability: Deploying only the necessary functionality on an edge device makes efficient use of its resources. For instance, if an edge device only needs to process video data or execute a prediction model, only the modules for video processing or model execution are deployed.

- Latency Reduction: By combining Edge Computing with modular applications, it’s possible to process data locally and act on it immediately. For example, in a factory, a sensor detecting an anomaly in a machine can process the information locally and take immediate actions, without relying on a centralized data center.

- Security: Segmenting an application into modules makes it possible to apply specific security protocols to each module based on its function. Furthermore, Edge Computing can limit data exposure by processing and storing information locally.

- Efficient decentralization: Edge Computing revolves around processing data close to where it originates. With modular applications, businesses can deploy tailored solutions in different locations, optimizing processing according to local needs.
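The factory scenario under Latency Reduction can be sketched as an on-device check that triggers a local action without contacting a data center. This minimal sketch assumes a rolling z-score as the detection technique; the class name, window size, threshold, and callback are hypothetical illustrations, not a production anomaly model.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Callable, Deque, List


class LocalAnomalyDetector:
    """Flags sensor readings that deviate sharply from a rolling baseline, entirely on-device."""

    def __init__(self, window: int, threshold: float, on_anomaly: Callable[[float], None]) -> None:
        self.baseline: Deque[float] = deque(maxlen=window)  # most recent normal readings
        self.threshold = threshold  # z-score above which a reading counts as anomalous
        self.on_anomaly = on_anomaly  # local action, e.g. slowing or stopping the machine

    def observe(self, value: float) -> bool:
        """Return True and trigger the local action if `value` is anomalous."""
        if len(self.baseline) == self.baseline.maxlen:
            mu, sigma = mean(self.baseline), pstdev(self.baseline)
            # sigma > 0 avoids dividing by zero when the baseline is perfectly flat.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                self.on_anomaly(value)  # act immediately: no round trip to a data center
                return True  # keep anomalies out of the baseline
        self.baseline.append(value)
        return False


actions: List[float] = []
detector = LocalAnomalyDetector(window=5, threshold=3.0, on_anomaly=actions.append)
for reading in [10.0, 10.2, 9.9, 10.1, 10.0, 10.05, 25.0]:
    detector.observe(reading)
print(actions)  # [25.0]
```

Only the anomaly decision runs locally here; summaries could still be sent to the cloud later for analysis.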

Basics of architectural composition in edge environments

- Lightweight Installation on Resource-Constrained Devices: It’s imperative to ensure that software and applications are as lightweight as possible when dealing with edge environments. Many edge devices are constrained in terms of memory, storage, and processing power. From a software engineering perspective, this means optimizing codebases, reducing overhead, and choosing efficient algorithms suitable for such environments.

- Network Communication Optimization: Given that edge environments often involve unstable or intermittent connections, it is critical to optimize network communication to decrease latency and enhance bandwidth efficiency. This may involve implementing efficient data transfer protocols, data compression techniques, or adaptive bitrate streaming.

- Robustness and Fault Tolerance: Due to the inherently unstable nature of edge environments, it is paramount to design systems that are robust and can recover rapidly from failures or interruptions. Architecturally, this could mean implementing failover mechanisms, redundancy, and self-healing capabilities in the system design.

- Security at the Edge: With a myriad of devices connected at the edge, it’s essential to ensure data and communication security and safeguard against potential threats. This involves implementing secure protocols, encryption, authentication, and regular security audits.

- Device and Platform Interoperability: Within an edge environment, one is likely to encounter multiple devices and platforms from various manufacturers. Ensuring interoperability between them is critical. Adopting standard protocols, using middleware solutions, and ensuring compliance with industry standards can help achieve this.

- Energy Conservation: Many edge devices operate on batteries or have limited power sources. Therefore, optimizing energy consumption is a priority. This could involve using energy-efficient algorithms, implementing duty cycling, or utilizing sleep modes effectively.

- Remote Updates and Maintenance: Given the challenges in physically accessing all devices in an edge environment, the capability to conduct remote updates and maintenance becomes essential. Solutions might include over-the-air (OTA) updates, remote diagnostics, and monitoring capabilities.

- Scalability: As more devices connect and generate data, the architecture must be able to scale to handle the increase in resource demand. This means ensuring modular design, using load balancers, and considering horizontal scalability strategies.

- Local Storage and Caching: To decrease dependency on network connections and enhance response times, it’s advantageous to have local storage and caching capabilities on edge devices. Implementing efficient caching algorithms, using fast-access storage mediums, and periodically syncing with central databases can be effective strategies.
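For robustness on intermittent links, a common technique is to retry network calls with exponential backoff and jitter. This is a minimal sketch not tied to any particular library: `retry_with_backoff` and the simulated `flaky_upload` are hypothetical, and the delay values are illustrative.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry a flaky network call with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError):  # retry only transient network errors
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the failure to the caller
            # Exponential growth capped at max_delay; full jitter spreads retries out so
            # many devices reconnecting at once do not hit the backend in lockstep.
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise AssertionError("unreachable")


calls = {"n": 0}


def flaky_upload() -> str:
    """Hypothetical upload that fails twice before the link comes back."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("link down")
    return "uploaded"


delays = []  # record delays instead of actually sleeping in this demo
print(retry_with_backoff(flaky_upload, sleep=delays.append))  # uploaded
print(calls["n"], len(delays))  # 3 2
```

Injecting `sleep` keeps the function testable; on a device you would leave the default `time.sleep`.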
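Local storage, periodic syncing, and data compression can be combined in a store-and-forward buffer. The sketch below assumes SQLite (Python’s built-in `sqlite3`) as the on-device store, zlib-compressed JSON as the wire format, and a hypothetical `send` callable standing in for the real uplink. Readings are deleted only after an upload succeeds, so a dropped connection loses nothing.

```python
import json
import sqlite3
import zlib
from typing import Callable, List


class StoreAndForward:
    """Persist readings locally and sync them to a central service in compressed batches."""

    def __init__(self, path: str = ":memory:") -> None:
        # On a real device, pass a file path so buffered data survives reboots.
        self.db = sqlite3.connect(path)
        self.db.execute("CREATE TABLE IF NOT EXISTS outbox (id INTEGER PRIMARY KEY, payload TEXT)")

    def record(self, reading: dict) -> None:
        with self.db:  # the connection context manager commits the insert
            self.db.execute("INSERT INTO outbox (payload) VALUES (?)", (json.dumps(reading),))

    def sync(self, send: Callable[[bytes], bool], batch_size: int = 100) -> int:
        """Send up to batch_size readings as one compressed JSON array; return how many were synced."""
        rows = self.db.execute(
            "SELECT id, payload FROM outbox ORDER BY id LIMIT ?", (batch_size,)
        ).fetchall()
        if not rows:
            return 0
        batch = "[" + ",".join(payload for _, payload in rows) + "]"
        if not send(zlib.compress(batch.encode())):
            return 0  # keep the batch for the next sync window
        with self.db:
            self.db.executemany("DELETE FROM outbox WHERE id = ?", [(row_id,) for row_id, _ in rows])
        return len(rows)

    def pending(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]


buf = StoreAndForward()
for i in range(3):
    buf.record({"sensor": "vibration", "seq": i})

print(buf.sync(lambda blob: False), buf.pending())  # 0 3  (link down, data kept)

received: List[list] = []


def upload(blob: bytes) -> bool:
    """Hypothetical uplink that decompresses and accepts the batch."""
    received.append(json.loads(zlib.decompress(blob)))
    return True


print(buf.sync(upload), buf.pending())  # 3 0
```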

Practical example

I’ll walk you through a practical example for environments that demand high availability, especially remote, resource-constrained locations and IoT appliances. With ARM architectures now found in devices ranging from the tiny Raspberry Pi to substantial AWS a1.4xlarge servers with 32 GiB of RAM, it’s possible to deploy a composable architecture on a single device.

This example provides a hands-on demonstration with K3s (https://k3s.io/). This isn’t just any Kubernetes distribution; it’s lightweight, efficient, and packed with features that make deployment a breeze.

Here is a simple example that deploys a container with a predictive maintenance model for industrial machinery using K3s:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: predictive-maintenance-service
spec:
  selector:
    app: predictive-maintenance
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: predictive-maintenance-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: predictive-maintenance
  template:
    metadata:
      labels:
        app: predictive-maintenance
    spec:
      containers:
        - name: predictive-maintenance-container
          image: sergicontre/predictive-maintenance-model:latest
          ports:
            - containerPort: 8080
```

Utilizing the Ambassador pattern, one container can act as a proxy for external communications, managing and optimizing traffic to other containers in a K3s cluster.

With this pattern, the Service does not talk directly to the model. Instead, it communicates with the ambassador, and the ambassador communicates with the model. This lets you centralize and optimize communication and perform additional tasks such as authentication, logging, and rate limiting.
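As an illustration of the extra tasks an ambassador can take on, here is a minimal Python sketch of an authentication check followed by a token-bucket rate limiter. The `X-Api-Key` header, the `ambassador_decision` function, and the rate values are hypothetical; a real ambassador would more likely be an off-the-shelf proxy configured to do this before forwarding to the model.

```python
import hmac
import time
from typing import Callable, Dict


class TokenBucket:
    """Token-bucket rate limiter: allows bursts up to `capacity`, refills at `rate` tokens/second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def ambassador_decision(headers: Dict[str, str], bucket: TokenBucket, api_key: str) -> int:
    """Hypothetical check the ambassador runs before forwarding a request to the model."""
    supplied = headers.get("X-Api-Key", "").encode()
    if not hmac.compare_digest(supplied, api_key.encode()):  # constant-time comparison
        return 401  # reject unauthenticated callers before they reach the model
    if not bucket.allow():
        return 429  # shed load instead of overwhelming a small edge device
    return 200  # forward the request to the model container


fake_now = [0.0]  # controllable clock for the demo
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])
hdrs = {"X-Api-Key": "secret"}
print([ambassador_decision(hdrs, bucket, "secret") for _ in range(3)])  # [200, 200, 429]
fake_now[0] = 1.0  # one second later, one token has been refilled
print(ambassador_decision(hdrs, bucket, "secret"))  # 200
print(ambassador_decision({}, bucket, "secret"))    # 401
```

Checking authentication before the rate limit means unauthenticated requests never consume tokens meant for legitimate clients.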

```yaml
---
# Pod spec fragment (under spec.template in a Deployment). Containers in a Pod share
# a network namespace, so the ambassador on 8080 can reach the model on localhost:5000.
spec:
  containers:
    - name: predictive-maintenance
      image: sergicontre/predictive-maintenance-model:latest
      ports:
        - containerPort: 5000
    - name: ambassador
      image: ambassador-image:latest  # placeholder: replace with your proxy image
      ports:
        - containerPort: 8080
```

Resiliency and fault tolerance are essential in edge computing environments due to potentially unstable conditions. The self-healing pattern is an approach that allows an application to automatically recover from failures without human intervention.

One way to implement self-healing is to run multiple replicas. If an instance fails, traffic can be directed to another instance while Kubernetes takes care of restarting the failed one.
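The following is a toy model in plain Python, not Kubernetes code. It mimics the desired-state reconciliation that Kubernetes controllers perform for a setting like three replicas: healthy replicas keep serving while failed ones are replaced. The pod names and the health flag are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyReplicaController:
    """Toy reconcile loop: keep `desired` healthy replicas running."""

    desired: int
    pods: Dict[str, bool] = field(default_factory=dict)  # pod name -> passing health checks?
    next_id: int = 0

    def reconcile(self) -> List[str]:
        """One pass of the control loop; returns the names of newly started pods."""
        # Drop pods that are no longer healthy...
        for name in [n for n, healthy in self.pods.items() if not healthy]:
            del self.pods[name]
        # ...then start replacements until the observed count matches the desired count.
        started = []
        while len(self.pods) < self.desired:
            name = f"predictive-maintenance-{self.next_id}"
            self.next_id += 1
            self.pods[name] = True
            started.append(name)
        return started

    def serving(self) -> List[str]:
        """Pods that can receive traffic; a Service would route only to these."""
        return [n for n, healthy in self.pods.items() if healthy]


ctrl = ToyReplicaController(desired=3)
print(ctrl.reconcile())  # ['predictive-maintenance-0', 'predictive-maintenance-1', 'predictive-maintenance-2']
ctrl.pods["predictive-maintenance-1"] = False  # simulate a crash
print(ctrl.serving())    # ['predictive-maintenance-0', 'predictive-maintenance-2']
print(ctrl.reconcile())  # ['predictive-maintenance-3']
```

Because the loop compares desired and observed state on every pass, it recovers the same way regardless of why a replica disappeared.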

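```yaml
---
# Deployment spec fragment: run three copies of the model Pod.
spec:
  replicas: 3
```

Liveness probes and readiness probes are mechanisms Kubernetes uses to check container health: a liveness probe tells it when a container should be restarted, and a readiness probe tells it when a container is ready to receive traffic.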

Let’s say your application has a /health endpoint that returns a 200 status code when everything is working correctly. We can configure the probes in this way:

```yaml
---
spec:
  containers:
    - name: predictive-model
      image: sergicontre/predictive-maintenance-model:latest
      ports:
        - containerPort: 5000
      livenessProbe:
        httpGet:
          path: /health
          port: 5000
        initialDelaySeconds: 30
        periodSeconds: 10
      readinessProbe:
        httpGet:
          path: /health
          port: 5000
        initialDelaySeconds: 30
        periodSeconds: 10
```

If the liveness probe fails (by default, after three consecutive failed checks), Kubernetes restarts the container.

The readiness probe works in the same way but is used to determine whether the container is ready to receive traffic. If this probe fails, the container is not restarted; it simply receives no traffic until the probe succeeds again.
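For completeness, here is a minimal sketch of what the `/health` endpoint on port 5000 might look like, using only Python’s standard library. The `STATE.loaded` flag is a hypothetical stand-in for "the model is in memory"; the actual model image may implement its health check differently. An HTTP probe treats any status from 200 to 399 as success, so returning 503 while loading marks the container as not healthy.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class ModelState:
    """Hypothetical holder for whether the predictive model has finished loading."""

    def __init__(self) -> None:
        self.loaded = threading.Event()


STATE = ModelState()


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/health":
            self.send_error(404)
            return
        healthy = STATE.loaded.is_set()
        body = json.dumps({"status": "ok" if healthy else "loading"}).encode()
        self.send_response(200 if healthy else 503)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep frequent probe requests out of the logs


def serve(port: int = 5000) -> ThreadingHTTPServer:
    """Start the health server in a background thread (in the container: serve(5000))."""
    server = ThreadingHTTPServer(("0.0.0.0", port), HealthHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def probe(port: int) -> int:
    """Return the status of GET /health, roughly as an httpGet probe would see it."""
    try:
        with urllib.request.urlopen(f"http://127.0.0.1:{port}/health", timeout=2) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code


srv = serve(0)  # port 0 picks a free port for this local demo
port = srv.server_address[1]
print(probe(port))  # 503 (model not loaded yet)
STATE.loaded.set()
print(probe(port))  # 200
srv.shutdown()
srv.server_close()
```

Since the manifest above points both probes at the same `/health` path, a slow model load could trip the liveness probe and cause restarts; serving separate liveness and readiness paths is a common way to avoid that.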